AMD Instinct™ MI300X Platform

The AMD Instinct™ MI300X platform is designed to deliver exceptional performance for AI and HPC.

AMD Instinct™ MI300 Series accelerators are uniquely well-suited to power even the most demanding AI and HPC workloads, offering exceptional compute performance, large memory density, high bandwidth memory, and support for specialized data formats.

AMD Instinct MI300 Series accelerators are built on AMD CDNA™ 3 architecture, which offers Matrix Core Technologies and support for a broad range of precision capabilities—from the highly efficient INT8 and FP8 (including sparsity support for AI), to the most demanding FP64 for HPC.

AMD Instinct MI300X accelerators are designed to deliver leadership performance for generative AI workloads and HPC applications.

304 GPU Compute Units

192 GB HBM3 Memory

5.3 TB/s Peak Theoretical Memory Bandwidth

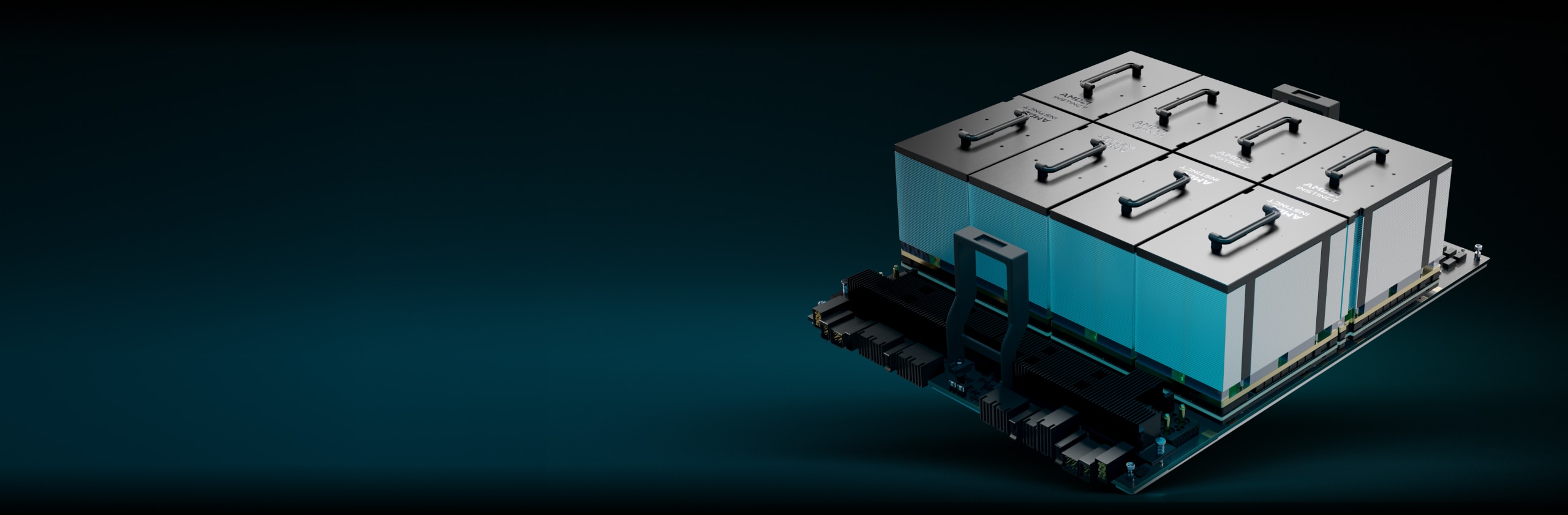

The AMD Instinct MI300X Platform integrates 8 MI300X GPU OAM modules onto an industry-standard OCP design, fully connected via 4th-Gen AMD Infinity Fabric™ links, and delivers up to 1.5 TB of HBM3 capacity for low-latency AI processing. This ready-to-deploy platform can accelerate time-to-market and reduce development costs when adding MI300X accelerators to existing AI rack and server infrastructure.

8 MI300X GPU OAM modules

1.5 TB Total HBM3 Memory

42.4 TB/s Peak Theoretical Aggregate Memory Bandwidth
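The platform figures above are the per-OAM specifications multiplied by eight. A minimal Python sketch of that arithmetic, assuming the per-GPU values listed earlier (192 GB HBM3 and 5.3 TB/s peak bandwidth per MI300X) and decimal units (1 TB = 1000 GB); the function name platform_totals is illustrative only:

```python
# Per-OAM figures taken from the MI300X specification list.
GPUS_PER_PLATFORM = 8
HBM3_PER_GPU_GB = 192
PEAK_BW_PER_GPU_TBPS = 5.3


def platform_totals(gpus: int, mem_gb: float, bw_tbps: float) -> tuple[float, float]:
    """Hypothetical helper: return (total HBM3 in GB, aggregate peak bandwidth in TB/s)."""
    return gpus * mem_gb, gpus * bw_tbps


total_mem_gb, total_bw_tbps = platform_totals(
    GPUS_PER_PLATFORM, HBM3_PER_GPU_GB, PEAK_BW_PER_GPU_TBPS
)

# 1536 GB, which rounds to the quoted 1.5 TB (decimal units assumed).
print(f"{total_mem_gb} GB HBM3 (~{total_mem_gb / 1000:.1f} TB)")
# Aggregate peak theoretical bandwidth across all eight OAMs.
print(f"{total_bw_tbps:.1f} TB/s aggregate peak bandwidth")
```

The aggregate bandwidth is a theoretical sum of per-GPU local HBM3 bandwidth; it does not describe GPU-to-GPU transfer rates over Infinity Fabric.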

AMD Instinct MI300A accelerated processing units (APUs) combine the power of AMD Instinct accelerators and AMD EPYC™ processors with shared memory to enable enhanced efficiency, flexibility, and programmability. They are designed to accelerate the convergence of AI and HPC, helping advance research and propel new discoveries.

The MI300A offers approximately 2.6x the HPC workload performance per watt using FP32 compared to AMD Instinct MI250X accelerators.⁷

AMD Instinct accelerators power some of the world's top supercomputers, including Lawrence Livermore National Laboratory's El Capitan system. This two-exaflop-class supercomputer is designed to use AI to run first-of-its-kind simulations and advance scientific research.

Product Basics

Name: AMD Instinct™ MI300X Platform

Family: Instinct

Series: Instinct MI300 Series

Form Factor: Instinct Platform (UBB 2.0)

GPUs: 8x Instinct MI300X OAM

Dimensions: 417 mm x 655 mm

Launch Date: December 6, 2023

General Specifications

Total Memory: 1.5 TB HBM3

Memory Bandwidth: 5.3 TB/s per OAM

Infinity Architecture: 4th Generation

Bus Type: PCIe® Gen 5 (128 GB/s)

Total Aggregate Bi-directional I/O Bandwidth (Peer-to-Peer): 896 GB/s

Warranty: 3-Year Limited

AI Performance

Total Theoretical Peak FP8 Performance: 20.9 PFLOPS

Total Theoretical Peak FP8 Performance with Structured Sparsity: 41.8 PFLOPS

Total Theoretical Peak TF32 Performance: 5.2 PFLOPS

Total Theoretical Peak TF32 Performance with Structured Sparsity: 10.5 PFLOPS

Total Theoretical Peak FP16 Performance: 10.5 PFLOPS

Total Theoretical Peak FP16 Performance with Structured Sparsity: 20.9 PFLOPS

Total Theoretical Peak bfloat16 Performance: 10.5 PFLOPS

Total Theoretical Peak bfloat16 Performance with Structured Sparsity: 20.9 PFLOPS

Total Theoretical Peak INT8 Performance: 20.9 POPS

Total Theoretical Peak INT8 Performance with Structured Sparsity: 41.8 POPS
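Two relationships in this list can be checked with simple arithmetic: each structured-sparsity figure is about twice the corresponding dense figure, and dividing a platform total by eight gives an approximate per-GPU peak. A short Python sketch, assuming the rounded totals above; per-GPU values derived this way inherit that rounding and are not official per-OAM specifications. The dictionary name AI_PEAK_TOTALS is illustrative only:

```python
GPUS = 8

# Platform totals from the AI Performance list as (dense, with structured sparsity),
# in PFLOPS (POPS for INT8). The source values are rounded to one decimal place.
AI_PEAK_TOTALS = {
    "FP8": (20.9, 41.8),
    "TF32": (5.2, 10.5),
    "FP16": (10.5, 20.9),
    "bfloat16": (10.5, 20.9),
    "INT8": (20.9, 41.8),
}

for fmt, (dense, sparse) in AI_PEAK_TOTALS.items():
    # Split the platform total evenly across the eight OAMs and convert P -> T.
    per_gpu = dense * 1000 / GPUS
    # Ratio of sparse to dense peak; close to 2.0, deviations come from rounding.
    ratio = sparse / dense
    print(f"{fmt}: ~{per_gpu:.1f} per GPU (TFLOPS, or TOPS for INT8), sparse/dense = {ratio:.2f}")
```

Because the inputs are rounded, ratios such as TF32 (10.5 / 5.2) land slightly above or below exactly 2.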

HPC Performance

Total Theoretical Peak Double Precision Matrix (FP64) Performance: 1.3 PFLOPS

Total Theoretical Peak Double Precision (FP64) Performance: 653.6 TFLOPS

Total Theoretical Peak Single Precision Matrix (FP32) Performance: 1.3 PFLOPS

Total Theoretical Peak Single Precision (FP32) Performance: 1.3 PFLOPS
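The HPC totals can be broken down the same way. A minimal Python sketch, assuming the listed platform totals (with 1.3 PFLOPS taken as 1300 TFLOPS, so per-GPU results are approximate) and an even split across eight OAMs; the dictionary names are illustrative only:

```python
GPUS = 8

# Platform totals from the HPC Performance list, converted to TFLOPS.
HPC_PEAK_TOTALS_TFLOPS = {
    "FP64 matrix": 1300.0,  # listed as 1.3 PFLOPS (rounded)
    "FP64 vector": 653.6,
    "FP32 matrix": 1300.0,  # listed as 1.3 PFLOPS (rounded)
    "FP32 vector": 1300.0,  # listed as 1.3 PFLOPS (rounded)
}

# Approximate per-GPU peak, assuming the total is split evenly across OAMs.
per_gpu = {name: total / GPUS for name, total in HPC_PEAK_TOTALS_TFLOPS.items()}
for name, tflops in per_gpu.items():
    print(f"{name}: ~{tflops:.1f} TFLOPS per GPU")

# FP64 matrix throughput is roughly twice FP64 vector throughput at the platform level.
fp64_ratio = HPC_PEAK_TOTALS_TFLOPS["FP64 matrix"] / HPC_PEAK_TOTALS_TFLOPS["FP64 vector"]
print(f"FP64 matrix/vector ratio: {fp64_ratio:.2f}")
```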